Substantiated findings

LLMs are great at organizing narratives and findings. Showing the sources that support each conclusion makes it easier to understand the analysis and where it comes from.

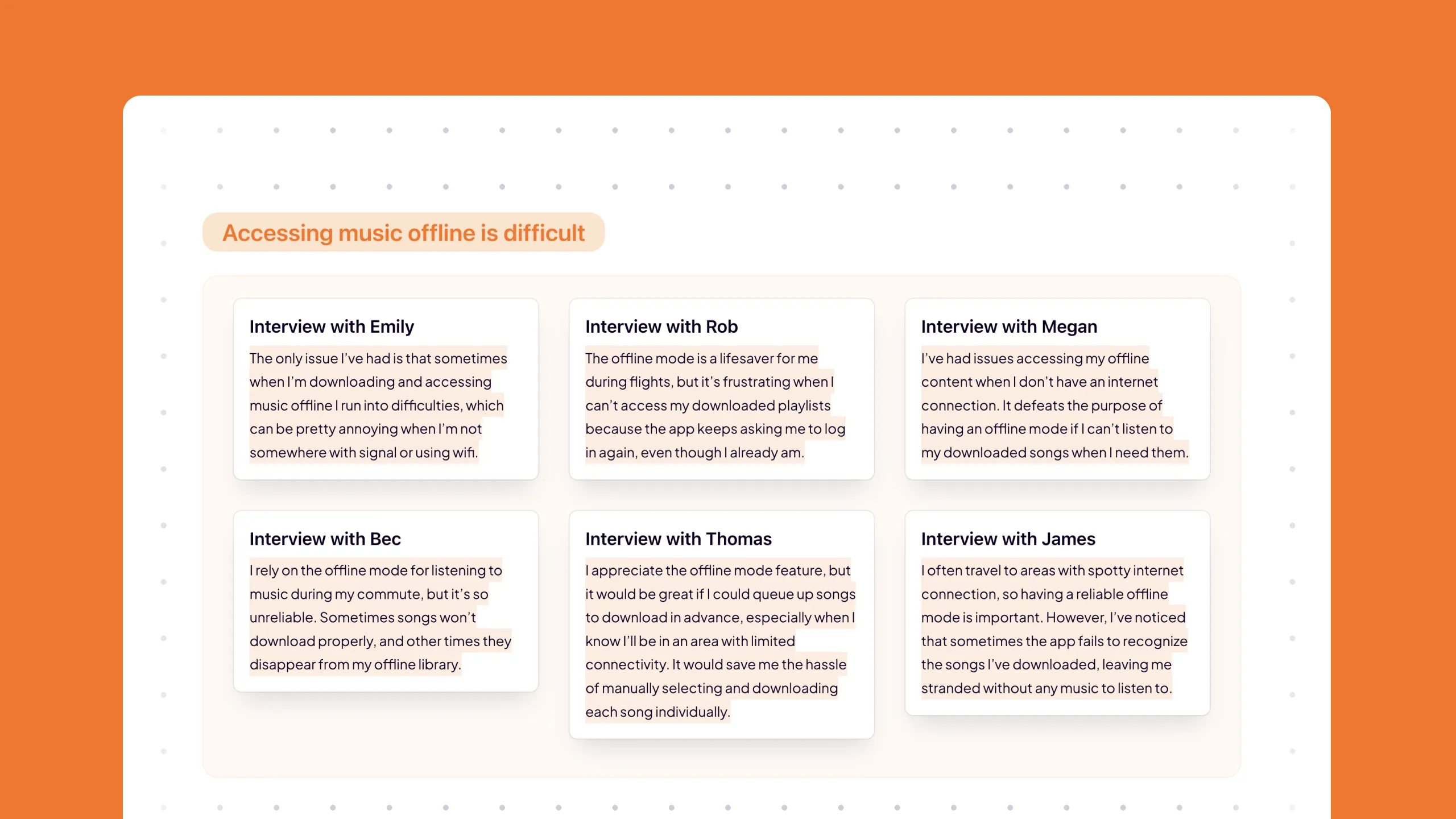

When reviewing findings, I want to see the supporting sources so I can understand and trust the conclusions more easily and see how each idea is substantiated.

- Comparing Similarity in Source Materials: Seeing related excerpts collected side by side makes it easy to compare how similar the source materials are.

- Building Trust through Access to Original Sources: Access to original sources builds trust in the findings, as users can review and understand the basis of the conclusions.
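To make the link between findings and sources concrete, here is a minimal sketch, assuming each finding stores its citations as character offsets into the original source text. The `Citation` and `Finding` types and `resolve_excerpts` are hypothetical names for illustration, not part of any particular tool. The idea is that the interface can always fetch the exact original passage behind a conclusion, and a citation that no longer matches its source fails loudly instead of silently.

```python
from dataclasses import dataclass, field


@dataclass
class Citation:
    """Hypothetical pointer to an exact span in an original source document."""
    source_id: str
    start: int  # character offset where the supporting excerpt begins
    end: int    # character offset where it ends (exclusive)


@dataclass
class Finding:
    """Hypothetical finding: a summary plus the citations that support it."""
    summary: str
    citations: list[Citation] = field(default_factory=list)


def resolve_excerpts(finding: Finding, sources: dict[str, str]) -> list[tuple[str, str]]:
    """Return (source_id, excerpt) pairs so a UI can show each finding's evidence.

    Raises KeyError for an unknown source and ValueError for an out-of-range
    span, so broken citations surface instead of showing the wrong text.
    """
    excerpts = []
    for c in finding.citations:
        text = sources[c.source_id]
        if not (0 <= c.start < c.end <= len(text)):
            raise ValueError(f"span {c.start}:{c.end} is out of range for {c.source_id}")
        excerpts.append((c.source_id, text[c.start:c.end]))
    return excerpts
```

Storing offsets rather than copied quotes keeps the original source as the single reference point, which is what lets users check the basis of a finding for themselves.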

More of the Witlist

Real-time generation lets people manipulate content instantly, giving them more agency when using generative AI as a tool for exploration.

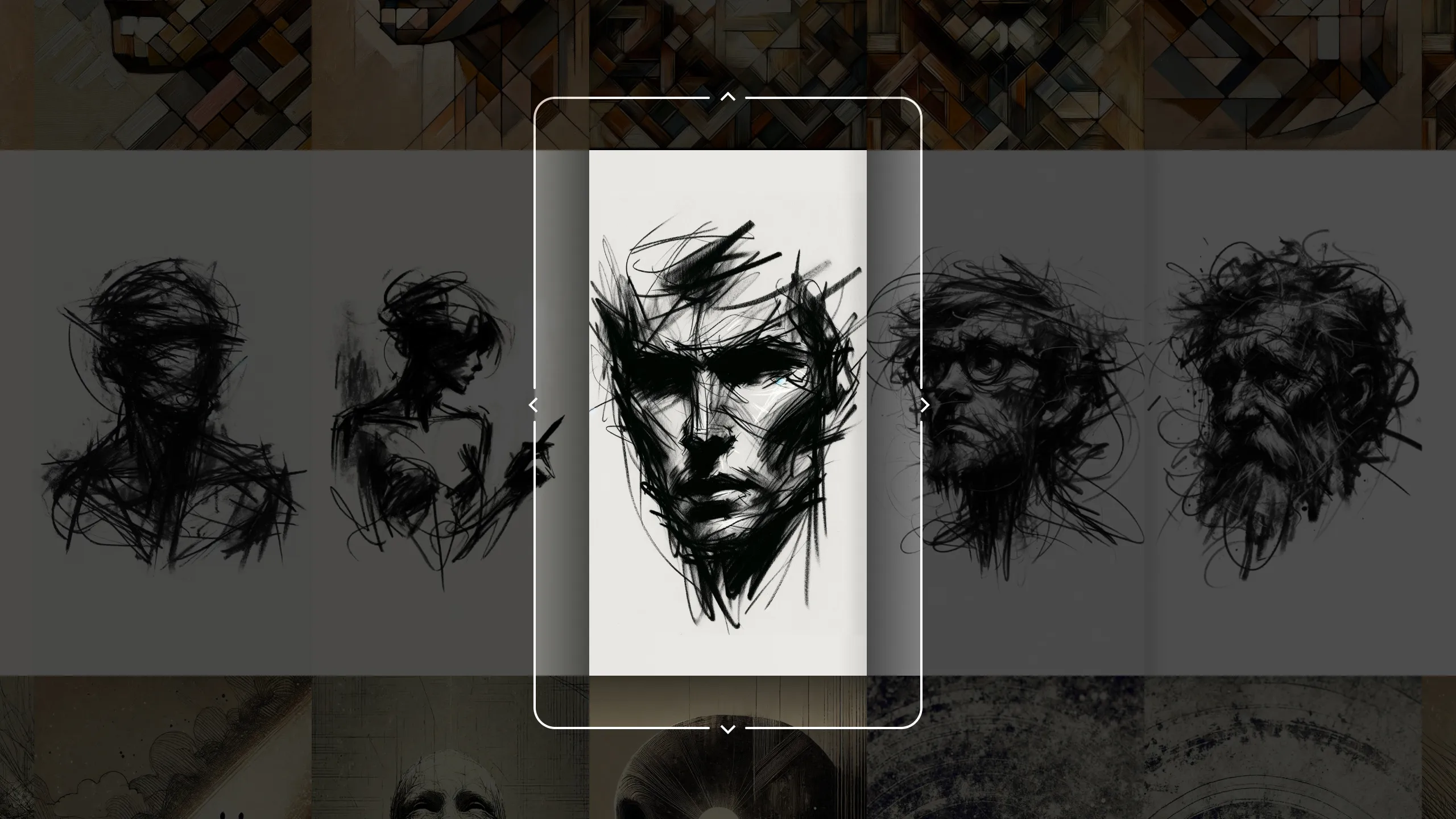

Ordering content along interpretable dimensions, such as style or similarity, lets people navigate it on the x and y axes, making it easier to explore the data and discover relationships within it.
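As a rough sketch of the layout step, assume a model has already given each item a score on two interpretable dimensions (the `x_score` and `y_score` fields, and the example dimensions in the comments, are hypothetical). Min-max normalizing each score and stretching it to the canvas size means an item's position directly encodes where it falls on each dimension.

```python
from dataclasses import dataclass


@dataclass
class Item:
    label: str
    x_score: float  # hypothetical dimension, e.g. a model-assigned "style" score
    y_score: float  # hypothetical dimension, e.g. similarity to a reference item


def layout(items: list[Item], width: int, height: int) -> dict[str, tuple[int, int]]:
    """Map each item's two scores to (x, y) pixel positions on a width x height canvas."""
    if not items:
        return {}
    x_lo, x_hi = min(i.x_score for i in items), max(i.x_score for i in items)
    y_lo, y_hi = min(i.y_score for i in items), max(i.y_score for i in items)

    def scale(value: float, lo: float, hi: float, size: int) -> int:
        # Min-max normalize to 0..1, then stretch to the canvas size.
        if hi == lo:
            return size // 2  # all scores equal: center instead of dividing by zero
        return round((value - lo) / (hi - lo) * size)

    return {
        i.label: (
            scale(i.x_score, x_lo, x_hi, width),
            # Screen y grows downward, so flip it to put high scores at the top.
            height - scale(i.y_score, y_lo, y_hi, height),
        )
        for i in items
    }
```

Because the axes are derived from named scores rather than an opaque projection, each direction on screen keeps a meaning people can reason about.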

Generative AI can offer input types beyond text, such as generated UI elements, to make interaction richer.

AI excels at classifying vast amounts of content, presenting an opportunity for new, more fluid filter interfaces tailored to the content.
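Once items carry labels, the filter interface itself is ordinary bookkeeping. The sketch below assumes the labels were already produced by an AI classifier (they would be hard-coded or loaded in practice) and shows how facet counts and an AND-style filter could be derived from them; `build_facets` and `apply_filter` are hypothetical names.

```python
from collections import Counter


def build_facets(labels_by_item: dict[str, set[str]]) -> list[tuple[str, int]]:
    """Count items per label for a filter sidebar: most common first, ties alphabetical."""
    counts = Counter(label for labels in labels_by_item.values() for label in labels)
    return sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))


def apply_filter(labels_by_item: dict[str, set[str]], selected: set[str]) -> list[str]:
    """Return the ids of items that carry every selected label (AND semantics)."""
    return sorted(item for item, labels in labels_by_item.items() if selected <= labels)
```

Since the facets are computed from whatever labels the classifier emits, the filter options adapt to the content instead of being fixed in advance.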

Embedding models can rank data by semantic meaning, scoring each individual segment on a spectrum to show how relevant it is across the whole artifact.
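A minimal sketch of segment-level scoring, assuming the query and segment embeddings have already been computed by some embedding model (the short vectors in a real call would be far longer; cosine similarity is one common choice of score, not the only one). Scores are kept in document order so each segment can be shaded along the artifact, and a separate helper turns them into a ranking.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two equal-length vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def relevance_spectrum(query: list[float], segments: list[list[float]]) -> list[float]:
    """Score every segment against the query, in document order, for shading."""
    return [cosine(query, seg) for seg in segments]


def rank_segments(scores: list[float]) -> list[int]:
    """Segment indices from most to least relevant (ties keep document order)."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
```

Keeping the scores in document order, rather than only returning the top matches, is what allows relevance to be shown throughout the artifact instead of as a short result list.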

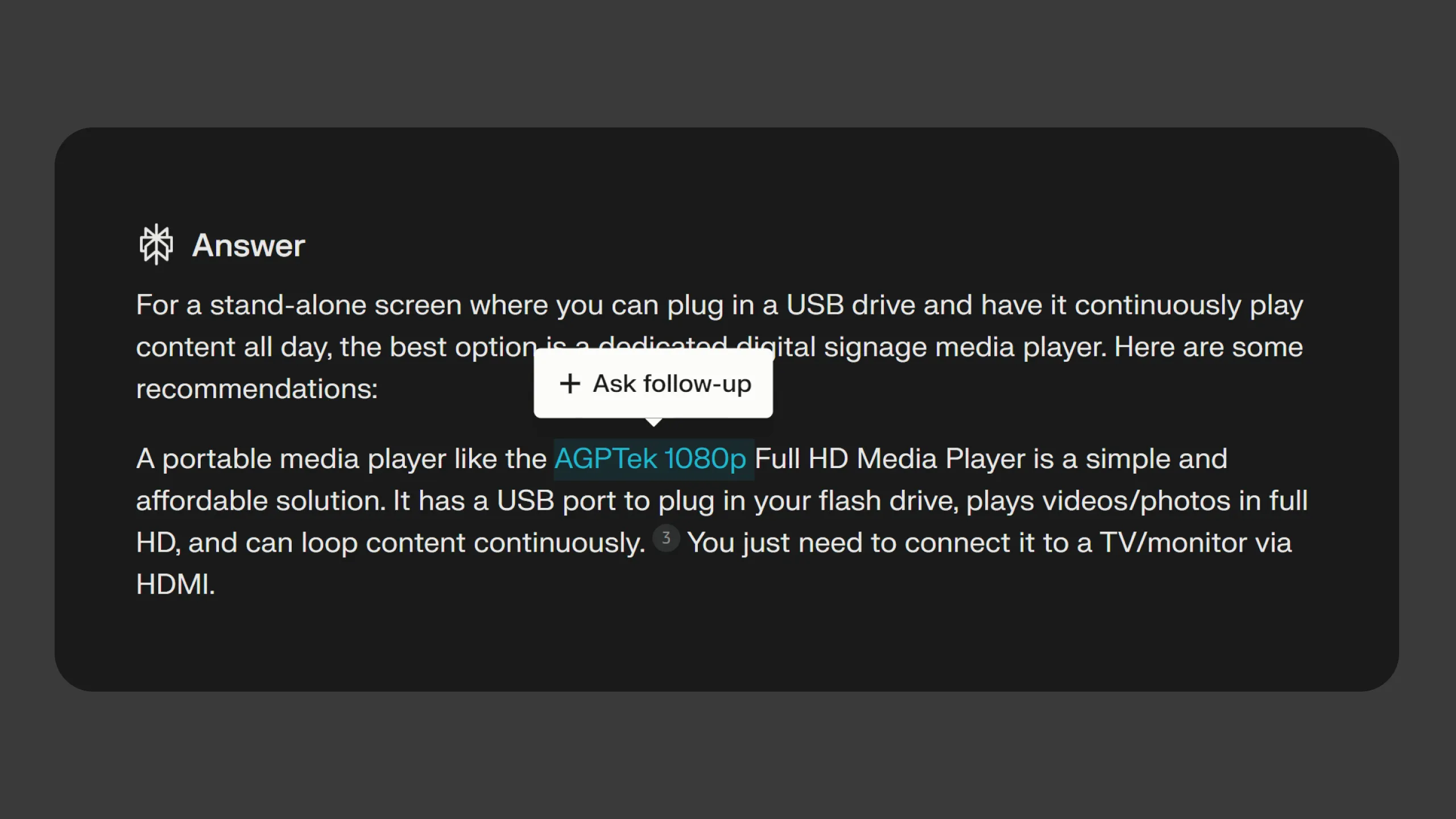

Letting people select text to ask follow-up questions provides immediate, context-specific information, enhancing AI interaction and exploration.
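One way to make a follow-up context-specific is to send the selected text together with a window of surrounding text and the user's question. The sketch below only assembles that prompt string; `build_followup_prompt`, its prompt wording, and the 200-character default window are assumptions, and the call to an actual model is left out.

```python
def build_followup_prompt(document: str, sel_start: int, sel_end: int,
                          question: str, context_chars: int = 200) -> str:
    """Package the selection, nearby text, and the user's question into one prompt."""
    if not (0 <= sel_start < sel_end <= len(document)):
        raise ValueError("selection is out of range")
    selected = document[sel_start:sel_end]
    # Include a little text on each side so the model sees where the selection sits.
    before = document[max(0, sel_start - context_chars):sel_start]
    after = document[sel_end:sel_end + context_chars]
    return (
        "The user selected a passage and asked a follow-up question.\n"
        f"Context before: {before}\n"
        f"Selected text: {selected}\n"
        f"Context after: {after}\n"
        f"Question: {question}"
    )
```

Passing offsets instead of just the selected string lets the prompt carry the surrounding context, which is what makes the answer specific to where the user was reading.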